Societal and Environmental Wellbeing

In Brief

Sustainability is one of the ethical aspects studied in the TAILOR project. Sustainability in AI refers to the application of AI technology (from the power consumption of the hardware to the processing and storage of very large datasets) while considering problems related to sustainable development. Sustainability in AI can be divided into two branches, both of which should be addressed: AI for Sustainability and Sustainability of AI [1]. The first branch is the more developed of the two. Its aim is to help achieve the United Nations Sustainable Development Goals (SDGs), and not-for-profit organisations such as AI4Good or AI for Climate are active in this area. It covers AI applications that use algorithms for environmental purposes, such as reducing the energy cost of large data centres or improving weather forecasts to maximise renewable energy production. The second branch concerns the sustainable development of AI/ML itself: how to measure the sustainability of developing and using AI models, for example through the computational cost of training AI algorithms or the resulting CO2 emissions.

Abstract

In this part we cover the main elements that define sustainability in AI systems. Some of them relate to AI for sustainability and can be common to computer systems in general; others relate to the sustainability of AI. We focus on sustainability in AI, paying more attention to new AI-specific issues and challenges because they are less covered in the literature. We include a taxonomic organisation of terms in the area of AI sustainability, together with their definitions.

Motivation and Background

Given the increasing capabilities and widespread use of artificial intelligence, there is growing concern about its impact on the environment, in particular the carbon footprint and the power consumption associated with developing, training and storing AI models and algorithms. There is an extensive literature on the dangers of climate change and on the need for consumers and industries to change how they use technology. Plans such as the European Green Deal, put forward by the European Commission, aim to tackle climate change. AI has the potential to accelerate efforts to protect the planet through many applications, such as using machine learning to optimise energy efficiency, reduce CO2 emissions, monitor air and water quality, biodiversity changes, vegetation and forest cover, and help prevent natural disasters.

Guidelines

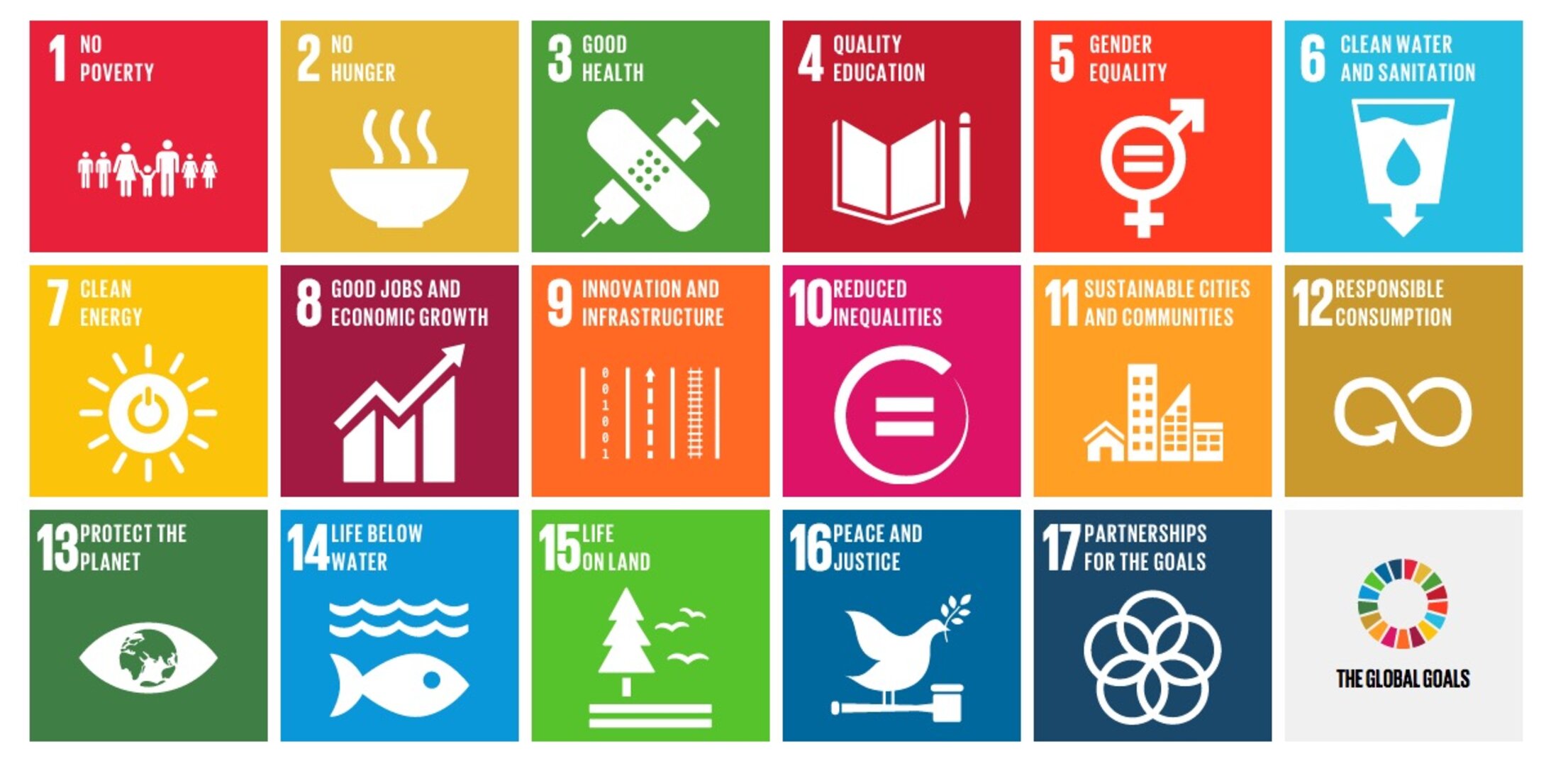

The guidelines include common elements that should be considered when designing and building technology that uses ML and other emerging technologies. In 2015 the United Nations General Assembly set up the Sustainable Development Goals, a list of 17 interlinked global goals intended to be achieved by 2030. Not-for-profit organisations such as AI4Good have also published guidelines, including “Guidelines on the Implementation of Eco-friendly Criteria for AI and other Emerging Technologies” and “Guidelines on the environmental efficiency of machine learning processes in supply chain management” (both guidelines can be found here), which address important aspects of the use and development of AI.

Taxonomic Organisation of Terms

The Sustainable Development Goals (SDGs) are a universal call to action to end poverty, protect the planet, and ensure that by 2030 all people enjoy peace and prosperity. They were adopted by the United Nations in 2015. The 17 goals are interlinked: they recognise that action in one area will affect outcomes in others and that development must balance social, economic and environmental sustainability (see here for further details).

AI is involved in many of these 17 Global Goals, including Sustainable cities and communities and Responsible consumption and production with areas like production optimization, smart cities and green computing.

Main Keywords

Sustainable AI: Sustainable AI has two goals: to reduce the energy consumption of AI itself, and to find ways in which AI can contribute to solving some of the big sustainability challenges facing humanity today (e.g., climate change).
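
One simple way to make the energy side of Sustainable AI measurable is a back-of-the-envelope estimate of the emissions of a training run: the energy drawn by the hardware, scaled by data-centre overhead, multiplied by the carbon intensity of the electricity grid. The sketch below is illustrative only. The function name `training_emissions_kg` and every numeric input (power draw, PUE overhead factor, grid intensity) are assumptions made for the example, not figures from this entry.

```python
def training_emissions_kg(avg_power_watts: float,
                          hours: float,
                          pue: float = 1.5,
                          grid_kg_co2_per_kwh: float = 0.4) -> float:
    """Rough CO2-equivalent estimate (kg) for a training run.

    The defaults are placeholder assumptions; substitute measured values.
    pue is the power usage effectiveness of the facility (>= 1).
    """
    if min(avg_power_watts, hours, grid_kg_co2_per_kwh) < 0 or pue < 1.0:
        raise ValueError("inputs must be non-negative and PUE must be >= 1")
    it_energy_kwh = avg_power_watts / 1000.0 * hours  # energy used by the hardware
    facility_kwh = it_energy_kwh * pue                # add cooling and other overhead
    return facility_kwh * grid_kg_co2_per_kwh


# Hypothetical run: 8 accelerators drawing 300 W each for 24 hours.
estimate = training_emissions_kg(avg_power_watts=8 * 300, hours=24)
print(f"{estimate:.1f} kg CO2e")
```

Such an estimate ignores embodied emissions from manufacturing the hardware, so it should be read as a lower bound under the stated assumptions.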

Green AI: The goal of Green AI (also known as Green IT, Green Computing, or ICT Sustainability) is to minimise the negative effects of IT operations on the environment. To do so, computers and IT products can be designed, manufactured and disposed of in an environmentally friendly manner.

Power-aware Computing: Power-aware computing (also called Energy-aware Computing and Energy-efficient Computing) is part of Green IT. Power-aware design strategies strive to maximise performance in high-performance systems within power dissipation and power consumption constraints. Reducing the power used by a node is one way to reduce the amount of energy required to compute, and lowering the frequency at which the CPU runs is one approach to doing this. A reduced clock speed, on the other hand, increases the time to solution, so there is a trade-off between power and runtime.
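
The trade-off between clock speed and time to solution can be illustrated with a toy model. The sketch below assumes that dynamic power grows with the cube of the frequency (voltage scaled together with frequency) on top of a constant static power; this is a common textbook simplification, and the function name and all coefficients are hypothetical values chosen for the example.

```python
def time_and_energy(freq_ghz: float,
                    work_gcycles: float = 100.0,
                    static_watts: float = 10.0,
                    dyn_coeff: float = 2.0) -> tuple[float, float]:
    """Return (seconds, joules) to finish a fixed amount of work.

    Assumed toy model: power = static_watts + dyn_coeff * freq_ghz**3.
    """
    if freq_ghz <= 0:
        raise ValueError("frequency must be positive")
    seconds = work_gcycles / freq_ghz                 # slower clock, longer run
    power_watts = static_watts + dyn_coeff * freq_ghz ** 3
    return seconds, power_watts * seconds             # energy = power * time


for f in (0.5, 1.0, 2.0, 3.0):
    seconds, joules = time_and_energy(f)
    print(f"{f:.1f} GHz: {seconds:6.1f} s, {joules:7.1f} J")
```

Under these assumptions, dropping from 3 GHz to 1 GHz saves energy at the cost of a longer run, while dropping further to 0.5 GHz costs more energy again because static power is paid for the whole, longer runtime.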

Cloud Computing: Cloud computing (or Mesh computing) is the on-demand provision of computing resources (storage and processing power) without direct management by the user. A large cloud often spans multiple data centres, each housing a different set of functions. The cloud computing model aims to achieve economies of scale through resource sharing, typically with a “pay-as-you-go” model that can decrease capital expenditure but can also result in unforeseen operating expenses for users who are unfamiliar with the pricing model.

Edge Computing: Edge Computing (or Fog Computing) is a distributed computing paradigm in which processing and data storage are brought closer to the data sources. This should improve response times (that is, reduce latency) while also conserving bandwidth. The term refers to an architecture rather than to a single technology.

Data Centre: A data centre is a building, a dedicated space inside a building, or a group of buildings used to house computer systems and related components such as telecommunications and storage systems. Because IT operations are so important for business continuity, data centres usually incorporate redundant or backup components and infrastructure for power, data transmission connections, environmental control (such as air conditioning and fire suppression), and security systems. A large data centre is an industrial-scale operation that can consume as much electricity as a small town.

Cradle-to-cradle Design: Cradle-to-cradle Design (also known as 2CC2, C2C, cradle 2 cradle, or regenerative design) is a biomimetic approach to product and system design that mimics natural processes, in which materials are considered as nutrients flowing in healthy, safe metabolisms.

Resource Prediction: Resource prediction (also called Workload Prediction or Workload Forecast) is the estimation of the resources a customer will require in the future to complete their tasks. The concept has a wide variety of applications and is particularly studied in the context of data centre management. To generate these forecasts, historical and current data are used to predict how many resource units, which tools and operating systems, and how many requests will be needed to accomplish a task.
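
As a minimal illustration of forecasting from historical data, the sketch below uses simple exponential smoothing to produce a one-step-ahead estimate of demand. This technique is chosen here only because it is short; it is not a method prescribed by this entry, and the function name, the smoothing factor and the sample data are all hypothetical.

```python
def forecast_next(history: list[float], alpha: float = 0.5) -> float:
    """One-step-ahead forecast by simple exponential smoothing.

    alpha close to 1 trusts recent observations; close to 0 trusts the past.
    """
    if not history:
        raise ValueError("history must not be empty")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    level = history[0]
    for observed in history[1:]:
        # Blend the newest observation with the running estimate.
        level = alpha * observed + (1.0 - alpha) * level
    return level


# Hypothetical hourly CPU-core usage for one customer.
cpu_cores_used = [4.0, 6.0, 8.0, 8.0]
print(forecast_next(cpu_cores_used))
```

Production workload predictors typically also model seasonality and trends; this sketch captures only the idea of weighting recent history more heavily.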

Resource Allocation: Cloud computing provides a computing environment where businesses, clients, and projects can lease resources on demand. Both cloud users and providers want to allocate cloud resources efficiently and profitably. These resources are typically scarce, so cloud providers must make the best use of them while staying within the limits of the cloud environment and meeting the demands of cloud applications so that they can perform their jobs. Resource allocation is one of the most important aspects of cloud computing, and its efficiency has a direct impact on the overall performance of the cloud environment. Cost efficiency, response time, reallocation, computing performance, and job scheduling are all key challenges in resource allocation. Cloud computing users want to complete their tasks at the lowest possible cost.
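
To make the allocation problem concrete, the sketch below packs jobs onto as few identical servers as a greedy heuristic can manage, so that unused machines could be powered down. It uses first-fit decreasing, a standard bin-packing heuristic, as a stand-in; real cloud schedulers weigh many more criteria (cost, response time, reallocation), and the job names, demands and capacity are hypothetical.

```python
def first_fit_decreasing(demands: dict[str, int], capacity: int) -> list[list[str]]:
    """Greedily assign jobs to identical servers of the given capacity.

    Returns one list of job names per server used.
    """
    servers: list[list[str]] = []  # job names placed on each server
    free: list[int] = []           # remaining capacity of each server
    # Place the largest jobs first; they are the hardest to fit.
    for job, need in sorted(demands.items(), key=lambda kv: kv[1], reverse=True):
        if need > capacity:
            raise ValueError(f"{job} needs more than one server can offer")
        for i, remaining in enumerate(free):
            if need <= remaining:
                servers[i].append(job)
                free[i] -= need
                break
        else:
            # No existing server has room, so start a new one.
            servers.append([job])
            free.append(capacity - need)
    return servers


# Hypothetical jobs with their demand in resource units.
jobs = {"web": 4, "db": 7, "batch": 5, "cache": 3, "etl": 1}
print(first_fit_decreasing(jobs, capacity=10))
```

In this example the total demand is 20 units, so the two fully used servers the heuristic returns are also the best possible result; in general first-fit decreasing is not guaranteed to be optimal.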

Social Impact of AI Systems: Artificial intelligence (AI) is quickly changing the way we work and the way we live. Indeed, the societal impact of AI is on most people’s minds.

AI human interaction: As AI develops to learn and adapt, it is increasingly being perceived as human-like in both its appearance and intelligence. This ability to imitate human behavior and interactions creates anthropomorphic cues which cause users to form emotional bonds [3]. As AI becomes more commonplace in our daily lives, being integrated into virtual assistants, recommender systems and similar tools, it is important to assess how a system’s anthropomorphic cues affect our interaction with it. In addition, potential risks resulting from AI-human interaction should be assessed prior to any implementation of AI, as previous research has demonstrated the unpredictability of AI.

Self-identification of AI: AI systems that are presented as highly intelligent and have a good-quality design tend to cause humans to anthropomorphize the system, i.e. to assign human-like traits to it, which can lead to emotional bonding with the system [3]. This is dangerous, especially for vulnerable groups such as older people or those less acquainted with the mechanisms behind the AI. In addition to the threat of emotional attachment, AI systems have been found to frequently provide false or partially false information and portray it as fact, a phenomenon referred to as AI hallucination. It is therefore important that users can clearly identify an artificial intelligence as such, in order to understand the system’s limitations and to prevent emotional attachment and misinformation. The remaining part of this chapter will first explain which AI systems are required to be clearly identifiable and then elaborate on how these criteria should be met.

Emotional Impact: Due to the fast development of AI systems, their behavior has, in some aspects, become akin to that of humans. Especially since the introduction of large language models like ChatGPT, it has become increasingly difficult to distinguish between human- and AI-generated language. When coupled with human-like design, this cognitive ability has been found to lead to emotional attachment by users. While emotional impact cannot be prevented entirely, it is important to assess the extent to which the AI encourages human attachment and to ensure clear signaling to end-users that they are interacting with an AI.

AI Impact on the Workforce: The impact of Artificial Intelligence on the workforce can be considered from several perspectives. The most common consideration is the loss of jobs or possibility of deskilling of the workforce due to the introduction of AI systems. A second consideration is the increased demand for workers with a computer-science skill set in order to operate and maintain implemented AI systems. A final consideration is the effect of AI-triggered changes for stakeholders. Prior to the introduction of an AI system, risks and consequences in these areas should be assessed and steps to counter negative outcomes should be considered.

Society and Democracy: The rapid pace of technological development is impacting society in new and partially unpredictable ways. With AI being integrated into all facets of life, ranging from recommender systems for movies to automatic passport control and facial recognition in airports, it is important to understand the negative ramifications this technology may have on society and democracy as a whole. Previously, the development of technologies was frequently guided by the interests of tech giants, with profit motivating research [4]. While this line of development has produced some impressive systems, it gives little consideration to the protection of citizens and the regulation of these technologies for the greater societal good. To counter this trend, the European Union introduced the AI Act, which stipulates rules for new AI systems depending on the level of risk posed by the system. However, with this increase in legislation, fears are rising that Europe will fall behind other, less regulated countries in the development of new software. Legislators therefore need to find a balance between regulation and development, protecting democracy and society without adversely affecting the development of the European AI research sector.

AI for social scoring: Social Scoring is the concept that all daily actions taken by individuals are monitored and scored for their benefit to society as a whole. Based on this scoring system, each individual is assigned a value which determines their access to education, healthcare and other public goods. AI lends itself to social scoring as it excels at facial recognition and movement identification. However, this poses several technical and ethical problems. The obvious ethical issue is that the goodness of actions is subjective, and the ability of individuals to access goods and services necessary for survival should not depend on their perceived ability to contribute to society. The technical issue is that the quality of an AI’s evaluation of movement hinges on the quality of its training data, with biased training data leading to biased evaluation. Social scoring has currently only been proposed by the Chinese government, but other countries, including the United Kingdom, are using automatic facial recognition on surveillance footage to search for fugitives.

AI for propaganda: With the advent of large language models, the mass production of text has become considerably cheaper and faster, requiring less human involvement. With the addition of automatic image generators such as DALL-E, the same is now possible for pictures. Previous work has found that GPT-3, the predecessor of ChatGPT, is capable of creating text as persuasive as content from existing covert propaganda [5]. This raises concern about the ease with which a large number of influential texts can be circulated on online platforms. To combat mass propaganda, it is important that online and offline platforms have effective controls to identify AI-generated material in their publications.

Bibliography

- [1] Aimee van Wynsberghe. Sustainable AI: AI for sustainability and the sustainability of AI. AI and Ethics, 1(3):213–218, 2021.

- [2] United Nations Department of Economic and Social Affairs. Sustainable development. URL: https://sdgs.un.org/goals (visited on 2022-05-02).

- [3] Joohee Kim and Il Im. Anthropomorphic response: understanding interactions between humans and artificial intelligence agents. Computers in Human Behavior, 139:107512, 2023.

- [4] Paul Nemitz. Constitutional democracy and technology in the age of artificial intelligence. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, 376(2133):20180089, 2018.

- [5] Josh A Goldstein, Jason Chao, Shelby Grossman, Alex Stamos, and Michael Tomz. How persuasive is AI-generated propaganda? PNAS Nexus, 3(2):pgae034, 2024.

Further Recommended Reading

van Wynsberghe, Aimee. “Sustainable AI: AI for sustainability and the sustainability of AI.” AI and Ethics 1.3 (2021): 213-218.

Vinuesa, Ricardo, et al. “The role of artificial intelligence in achieving the Sustainable Development Goals.” Nature communications 11.1 (2020): 1-10.

Graybill, Robert, and Rami Melhem, eds. Power aware computing. Springer Science & Business Media, 2013.

Schoormann, Thorsten, et al. “Achieving Sustainability with Artificial Intelligence—A Survey of Information Systems Research.” (2021).

Khakurel, Jayden, et al. “The rise of artificial intelligence under the lens of sustainability.” Technologies 6.4 (2018): 100.

Nishant, Rohit, Mike Kennedy, and Jacqueline Corbett. “Artificial intelligence for sustainability: Challenges, opportunities, and a research agenda.” International Journal of Information Management 53 (2020): 102104.

Wu, Carole-Jean, et al. “Sustainable ai: Environmental implications, challenges and opportunities.” arXiv preprint arXiv:2111.00364 (2021).

Fisher, Douglas H. “Computing and AI for a Sustainable Future.” IEEE intelligent systems 26.6 (2011): 14-18.

Pedemonte, C. “AI for Sustainability: an overview of AI and the SDGs to contribute to European policy-making.” (2021).

Galaz, Victor, et al. “Artificial intelligence, systemic risks, and sustainability.” Technology in Society 67 (2021): 101741.

This entry was written by Andrea Rossi, Andrea Visentin and Barry O’Sullivan.