Explainable AI

In Brief

Explainable AI (often shortened to XAI) is one of the ethical dimensions studied in the TAILOR project. A key milestone for XAI was the entry into force of the General Data Protection Regulation (GDPR). In its Recital 71, the GDPR [1] mentions a right to explanation as one of the suitable safeguards that ensure fair and transparent processing in respect of data subjects. It describes this as the right "to obtain an explanation of the decision reached after such assessment", that is, after automated processing such as profiling.

More in Detail

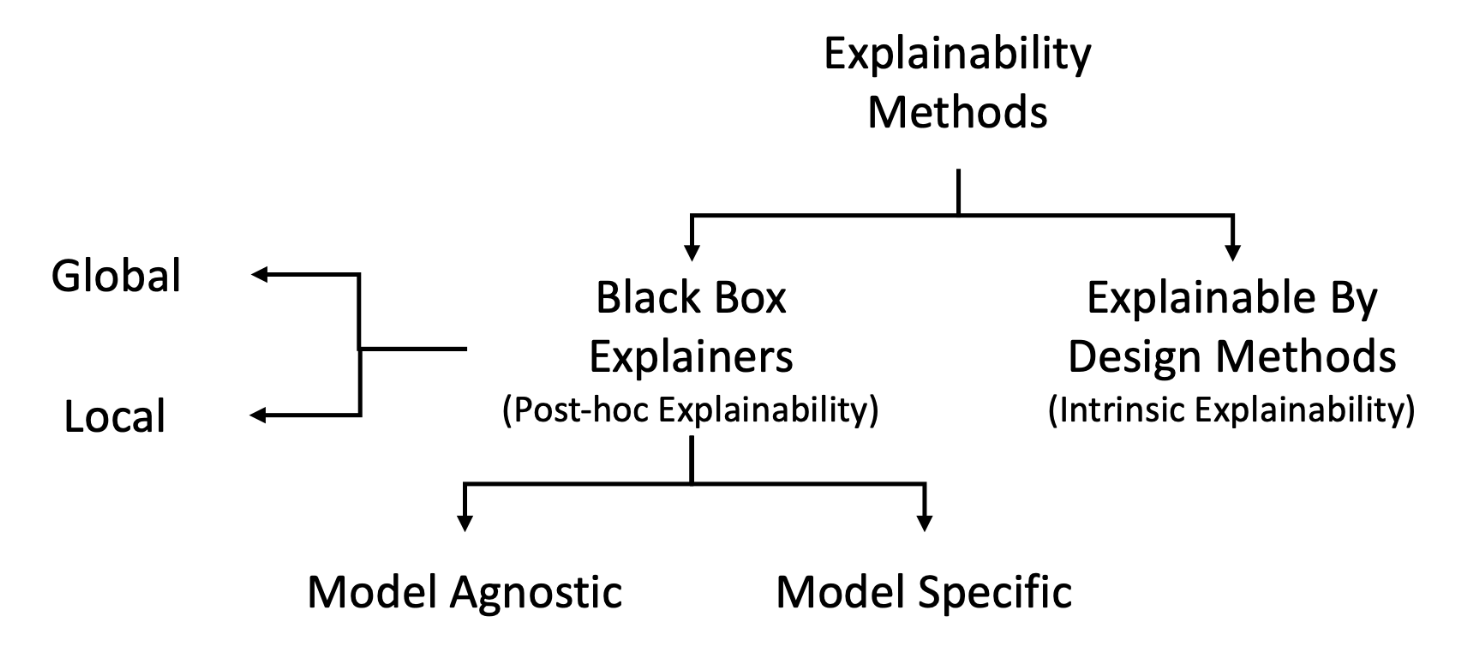

According to the Ethics Guidelines for Trustworthy AI of the High-Level Expert Group on Artificial Intelligence, explainability is part of the broader transparency dimension. Explainability concerns the ability to explain both the technical processes of an AI system and the related human decisions (e.g., the application areas of a system). This aspect is also analyzed in the Black Box Explanation vs Explanation by Design entry.

The goal of this task is to explain how the decision system returned certain outcomes (the so-called Black Box Explanation). The Black Box Explanation problem can be further divided into three sub-problems. Model Explanation applies when the explanation involves the whole logic of the obscure classifier. Outcome Explanation applies when the goal is to understand the reasons for the decision on a given object. Model Inspection applies when the goal is to understand how the black box behaves internally as the input changes.
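
Two of these problems can be illustrated with model-agnostic probes that only query the black box. The sketch below assumes a hypothetical loan-scoring model `black_box_proba` with three made-up features (income, debt, age) and made-up data. It uses occlusion for Outcome Explanation: each feature of a given instance is reset to a baseline value, and the resulting change in score is recorded. It uses a partial dependence curve for Model Inspection. Neither technique is claimed to be the method of any particular system.

```python
import math
from statistics import mean


def black_box_proba(x):
    """Hypothetical opaque model: probability that a loan is approved.

    The explainers below only call this function; they never read its weights.
    """
    income, debt, age = x
    z = 0.05 * income - 0.1 * debt + 0.02 * age - 2.0
    return 1.0 / (1.0 + math.exp(-z))


def occlusion_attribution(model, x, baseline):
    """Outcome Explanation: for one instance x, measure each feature's effect
    on the score by replacing that feature with a baseline value."""
    score = model(x)
    attributions = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline[i]
        # > 0: this feature's value raised the score relative to the baseline
        attributions.append(score - model(occluded))
    return attributions


def partial_dependence(model, data, feature, grid):
    """Model Inspection: average model output as one feature is set to each
    grid value while every other feature keeps its observed value."""
    curve = []
    for value in grid:
        outputs = []
        for row in data:
            probe = list(row)
            probe[feature] = value
            outputs.append(model(probe))
        curve.append(mean(outputs))
    return curve


# Hypothetical data: (income, debt, age); the baseline is the per-feature mean.
data = [(40, 10, 30), (60, 20, 45), (80, 5, 50), (30, 25, 25)]
baseline = [mean(column) for column in zip(*data)]

x = (70, 30, 40)
attributions = occlusion_attribution(black_box_proba, x, baseline)
pd_income = partial_dependence(black_box_proba, data, feature=0, grid=[20, 50, 80])
print("attributions (income, debt, age):", attributions)
print("partial dependence on income:", pd_income)
```

With these invented numbers, income receives a positive attribution and debt a negative one, because the instance's income and debt both lie above the mean. The partial dependence curve rises with income. Using the mean as the occlusion baseline is only one of several possible choices, and a different baseline can change the attributions.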

On a different dimension, much effort has gone into defining several aspects of explanation. These include the possible techniques (e.g., distinguishing Model-Specific from Model-Agnostic Explainers), the scope of the explained outcome (i.e., Global vs Local Explanations), the requirements for providing good explanations (see guidelines), how to evaluate explanations, and how to understand Feature Importance. A variety of different kinds of explanations can also be provided, such as Single Tree Approximation, Feature Importance, Saliency Maps, Factual and Counterfactual, Exemplars and Counter-Exemplars, and Rules List and Rules Sets.
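
The difference between factual and counterfactual explanations can be made concrete with a minimal sketch of a model-agnostic counterfactual search. It assumes a hypothetical binary loan classifier `black_box` and hypothetical per-feature step sizes. The factual explanation is the denied decision on `x`. The counterfactual is the smallest single-feature change, counted in steps, that flips that decision. This brute-force search is only illustrative: practical counterfactual methods typically optimize a distance over several features and also check that the result is plausible.

```python
def black_box(x):
    """Hypothetical opaque classifier: 1 = loan approved, 0 = denied."""
    income, debt, age = x
    score = 0.05 * income - 0.1 * debt + 0.02 * age
    return 1 if score >= 2.0 else 0


def first_flip(model, x, feature, step, max_steps):
    """Move one feature up or down in increments of `step` and return
    (steps taken, changed instance) for the first value that flips the decision."""
    original = model(x)
    for k in range(1, max_steps + 1):
        for direction in (1, -1):
            candidate = list(x)
            candidate[feature] = x[feature] + direction * k * step
            if model(candidate) != original:
                return k, candidate
    return None


def counterfactual(model, x, steps, max_steps=50):
    """Return (steps, feature index, counterfactual instance) for the cheapest
    single-feature change that flips the decision, or None if none is found."""
    best = None
    for feature, step in enumerate(steps):
        found = first_flip(model, x, feature, step, max_steps)
        if found is not None and (best is None or found[0] < best[0]):
            best = (found[0], feature, found[1])
    return best


x = (50, 30, 40)  # hypothetical applicant: (income, debt, age), currently denied
result = counterfactual(black_box, x, steps=(5, 5, 10))
print(result)  # → (4, 1, [50, 10, 40])
```

Here the search reports that lowering debt from 30 to 10 would flip the decision to an approval. Comparing step counts across features is only meaningful if the step sizes are on comparable scales, which is a design assumption of this sketch.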

Bibliography

[1] European Parliament & Council. General Data Protection Regulation. 2016. L119, 4/5/2016, p. 1–88.

[2] R. Guidotti, A. Monreale, S. Ruggieri, F. Turini, F. Giannotti, and D. Pedreschi. A survey of methods for explaining black box models. ACM Computing Surveys (CSUR), 2018.

This entry was written by Francesca Pratesi.