Dimensions of Explanations

In brief

The dimensions of explanations are useful for analyzing the interpretability of AI systems and for classifying explanation methods.

More in detail

The goal of Explainable AI is to interpret AI reasoning. According to Merriam-Webster, to interpret means to give or provide the meaning of something, or to explain and present it in understandable terms. Therefore, in AI, interpretability is defined as the ability to explain or to provide meaning in understandable terms to a human [2],[3]. These definitions assume that the concepts composing an explanation, expressed in understandable terms, are self-contained and do not require further explanation. An explanation is thus an “interface” between a human and an AI: it is simultaneously human-understandable and an accurate proxy of the AI.

We can identify a set of dimensions for analyzing the interpretability of AI systems which, in turn, are reflected in the different types of existing explanations [1]. Some of these dimensions are related to the functional requirements of explainable artificial intelligence, i.e., requirements that identify the algorithmic adequacy of a particular approach for a specific application, while others are related to the operational requirements, i.e., requirements that take into account how users interact with an explainable system and what they expect from it. Other dimensions derive from the need for usability criteria from the user's perspective, and still others from the need for guarantees against vulnerability issues.

Recently, all these requirements have been analyzed [4] to provide a framework that allows the systematic comparison of explainability methods. In particular, Sokol and Flach [4] propose Explainability Fact Sheets, which enable researchers and practitioners to assess the capabilities and limitations of a particular explanation method. For example, given an explanation method m, we can consider the following functional requirements:

(i) Even though m is designed to explain regressors, can we use it to explain probabilistic classifiers?

(ii) Can we employ m on categorical features even though it only works on numerical features?

As an operational requirement, on the other hand, we can ask what the function of the explanation is: to provide transparency, to assess fairness, etc.
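To make the distinction between functional and operational requirements more concrete, the following is a minimal sketch of how a few entries of a fact sheet could be recorded and queried for the method m. It is an illustration under stated assumptions, not the schema of Sokol and Flach: the class `FactSheet`, its fields `model_types`, `feature_types` and `functions`, the helpers `can_explain` and `serves`, and the string labels such as `"regressor"` are all hypothetical. The sketch only records the capabilities a method declares; it does not capture whether a method could be adapted to a new setting.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class FactSheet:
    """Hypothetical, simplified fact sheet for an explanation method.

    Only a few functional and operational entries are modelled; this is
    not the fact-sheet schema proposed by Sokol and Flach.
    """

    name: str
    # Functional requirements: which models and feature types the method handles.
    model_types: frozenset[str]
    feature_types: frozenset[str]
    # Operational requirements: what the explanation is meant to achieve.
    functions: frozenset[str]


def can_explain(sheet: FactSheet, model_type: str, feature_types: set[str]) -> bool:
    """Functional check: the model type is supported and every feature type is covered."""
    return model_type in sheet.model_types and feature_types <= sheet.feature_types


def serves(sheet: FactSheet, purpose: str) -> bool:
    """Operational check: the explanation is declared to serve this purpose."""
    return purpose in sheet.functions


# Hypothetical method m, designed for regressors on numerical features only.
m = FactSheet(
    name="m",
    model_types=frozenset({"regressor"}),
    feature_types=frozenset({"numerical"}),
    functions=frozenset({"transparency"}),
)

print(can_explain(m, "probabilistic_classifier", {"numerical"}))  # question (i): False
print(can_explain(m, "regressor", {"numerical", "categorical"}))  # question (ii): False
print(serves(m, "fairness"))  # operational question: False
```

Storing capabilities as sets keeps each check a simple membership or subset test; an actual Explainability Fact Sheet is a much richer document covering several dimensions at once.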

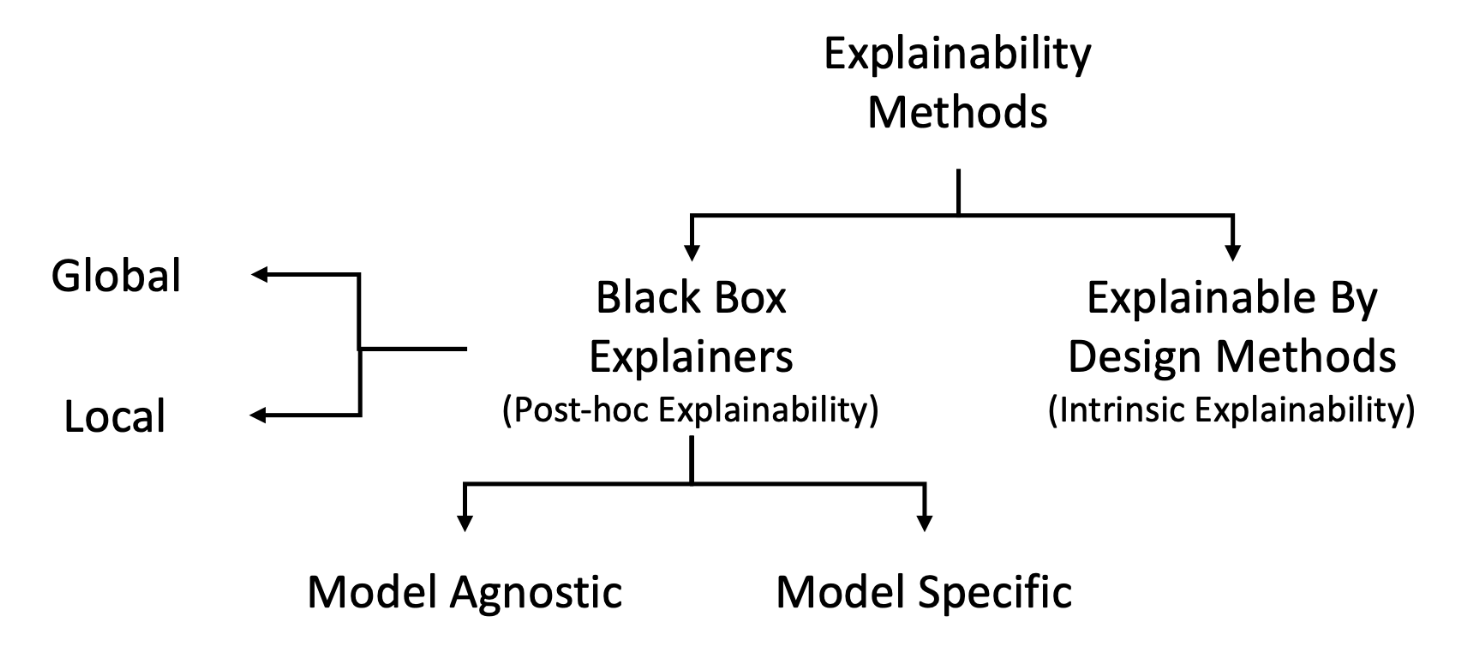

Besides the detailed requirements illustrated in [4], the literature recognizes a categorization of explanation methods along fundamental pillars [2],[1]:

Fig. 6 illustrates a summarized ontology of the taxonomy used to classify XAI methods.

Bibliography

- [1] R. Guidotti, A. Monreale, S. Ruggieri, F. Turini, F. Giannotti, and D. Pedreschi. A survey of methods for explaining black box models. ACM Computing Surveys (CSUR), 2018.

- [2] A. B. Arrieta, N. Díaz-Rodríguez, J. Del Ser, A. Bennetot, S. Tabik, A. Barbado, S. García, S. Gil-López, D. Molina, and R. Benjamins. Explainable artificial intelligence (XAI): concepts, taxonomies, opportunities and challenges toward responsible AI. Information Fusion, 2020.

- [3] F. Doshi-Velez and B. Kim. Towards a rigorous science of interpretable machine learning. arXiv preprint arXiv:1702.08608, 2017.

- [4] K. Sokol and P. A. Flach. Explainability fact sheets: a framework for systematic assessment of explainable approaches. In ACM Conference on Fairness, Accountability, and Transparency, 56–67. ACM, 2020.

- [5] A. Adadi and M. Berrada. Peeking inside the black-box: a survey on explainable artificial intelligence (XAI). IEEE Access, 6:52138–52160, 2018.

- [6] R. Guidotti, A. Monreale, D. Pedreschi, and F. Giannotti. Principles of Explainable Artificial Intelligence. Springer International Publishing, 2021.

This entry was adapted by Francesca Pratesi and Riccardo Guidotti from Guidotti, Monreale, Pedreschi, and Giannotti, Principles of Explainable Artificial Intelligence, Springer International Publishing (2021).