Local Rule-based Explanation

In Brief

Local Rule-based Explanation aims to extract a rule that locally explains the model's decision for a specific instance.

More in Detail

Global explanations are useful, but they can be inaccurate because interpreting a whole model is complex. Even when the overall decision boundary is difficult to explain, the boundary in the neighborhood of a specific instance is often simpler. A local explanation rule can therefore reveal the factual reasons for the decision taken by a black-box AI system on that instance. The LORE method [2] returns such a local rule-based explanation. It builds a local decision tree on a neighborhood of the analyzed instance; the neighborhood is generated with a genetic procedure so that it accounts for both similarities to and differences from the analyzed instance.

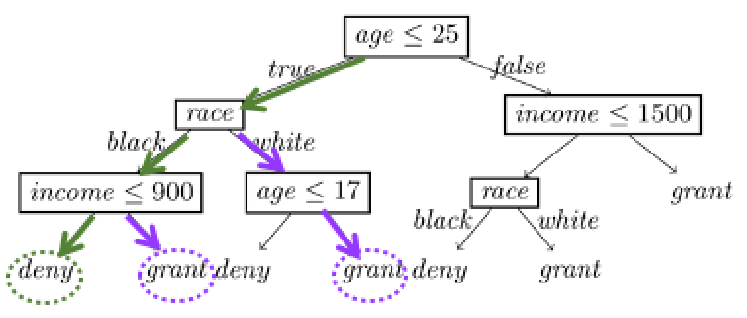

LORE then extracts from the tree a local rule revealing the reasons for the decision on the specific instance (see the green path in the figure above). For instance, the LORE explanation for a loan request denied to a customer with

"age=22, race=black, and income=800"

by a bank that uses an AI system could be the factual rule

if age ≤ 25 and race = black and income ≤ 900 then deny.
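The pipeline described above (generate a neighborhood, fit a local decision tree, read the rule off the path followed by the instance) can be illustrated with a minimal sketch. It is not the LORE implementation: the neighborhood is drawn by plain random perturbation instead of LORE's genetic procedure, the tree is a small greedy Gini tree written for this example, categorical features are left out for brevity, and `black_box`, `neighborhood`, `build_tree`, and `extract_rule` are hypothetical names introduced here.

```python
import random

def black_box(x):
    # Hypothetical opaque model standing in for the bank's AI system.
    return "deny" if x["age"] <= 25 and x["income"] <= 900 else "grant"

def neighborhood(x, n=300, seed=0):
    # Assumption: random perturbation around x (LORE uses a genetic procedure).
    rng = random.Random(seed)
    return [{"age": x["age"] + rng.randint(-10, 10),
             "income": x["income"] + rng.randint(-400, 400)} for _ in range(n)]

def gini(labels):
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(rows, labels):
    # Try every "feature <= threshold" test and keep the lowest weighted Gini.
    best = None
    for f in rows[0]:
        for t in sorted({r[f] for r in rows})[:-1]:
            left = [l for r, l in zip(rows, labels) if r[f] <= t]
            right = [l for r, l in zip(rows, labels) if r[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(rows)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best

def build_tree(rows, labels, depth=3):
    # Internal node: (feature, threshold, left, right); leaf: a class label.
    split = best_split(rows, labels) if depth > 0 and len(set(labels)) > 1 else None
    if split is None:
        return max(set(labels), key=labels.count)  # majority label
    _, f, t = split
    lo = [(r, l) for r, l in zip(rows, labels) if r[f] <= t]
    hi = [(r, l) for r, l in zip(rows, labels) if r[f] > t]
    return (f, t,
            build_tree([r for r, _ in lo], [l for _, l in lo], depth - 1),
            build_tree([r for r, _ in hi], [l for _, l in hi], depth - 1))

def extract_rule(tree, x):
    # Follow the path taken by x and collect the tests met along the way.
    premises = []
    while isinstance(tree, tuple):
        f, t, left, right = tree
        if x[f] <= t:
            premises.append(f"{f} <= {t}")
            tree = left
        else:
            premises.append(f"{f} > {t}")
            tree = right
    return premises, tree

x = {"age": 22, "income": 800}
Z = neighborhood(x) + [x]              # local neighborhood, including x itself
y = [black_box(z) for z in Z]          # labels assigned by the black box
tree = build_tree(Z, y)                # local surrogate tree
premises, outcome = extract_rule(tree, x)
print("if " + " and ".join(premises) + " then " + outcome)
```

The thresholds in the printed rule depend on which neighbors happen to be sampled, so they approximate the black box's true boundaries rather than reproduce them exactly.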

Anchor [3] is another XAI approach that locally explains black-box models with decision rules called anchors. An anchor is a set of features with thresholds that identifies the values that are fundamental for obtaining a certain decision of the AI system. Anchor explores the black-box behavior efficiently by generating random instances and exploiting a multi-armed bandit formulation.
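The idea of an anchor can be sketched in a few lines: fix some features of the instance, redraw the others at random, and measure how often the black box still returns the same decision. The sketch below is a simplification under stated assumptions and not the Anchor algorithm itself: it fixes features to the instance's exact values instead of threshold predicates, it estimates precision with a fixed number of samples instead of a multi-armed bandit, and `black_box`, `RANGES`, `precision`, `greedy_anchor`, and the `tenure` feature are hypothetical.

```python
import random

def black_box(x):
    # Hypothetical opaque model; it ignores the "tenure" feature.
    return "deny" if x["age"] <= 25 and x["income"] <= 900 else "grant"

# Assumed value ranges used to redraw the features that are not anchored.
RANGES = {"age": (18, 70), "income": (0, 3000), "tenure": (0, 40)}

def sample(x, anchor, rng):
    # Keep anchored features at x's values, redraw the rest at random.
    return {f: x[f] if f in anchor else rng.randint(*RANGES[f]) for f in x}

def precision(x, anchor, rng, n=1000):
    # Fraction of perturbed instances that receive the same decision as x.
    target = black_box(x)
    return sum(black_box(sample(x, anchor, rng)) == target for _ in range(n)) / n

def greedy_anchor(x, tau=0.95, seed=0):
    # Add the feature that raises precision most until the threshold tau is met.
    rng = random.Random(seed)
    anchor = set()
    while precision(x, anchor, rng) < tau and len(anchor) < len(x):
        best = max((f for f in x if f not in anchor),
                   key=lambda f: precision(x, anchor | {f}, rng))
        anchor.add(best)
    return anchor

x = {"age": 22, "income": 800, "tenure": 3}
anchor = greedy_anchor(x)
print(sorted(anchor))
```

In this toy setting the irrelevant `tenure` feature is left out of the anchor, because fixing it does not make the decision any more stable.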

Bibliography

- [1] Riccardo Guidotti, Anna Monreale, Dino Pedreschi, and Fosca Giannotti. Principles of Explainable Artificial Intelligence. Springer International Publishing, 2021.

- [2] Riccardo Guidotti, Anna Monreale, Fosca Giannotti, Dino Pedreschi, Salvatore Ruggieri, and Franco Turini. Factual and counterfactual explanations for black box decision making. IEEE Intelligent Systems, 34(6):14–23, 2019.

- [3] Marco Tulio Ribeiro, Sameer Singh, and Carlos Guestrin. Anchors: high-precision model-agnostic explanations. In Thirty-Second AAAI Conference on Artificial Intelligence. 2018.

This entry was readapted from Guidotti, Monreale, Pedreschi, Giannotti. Principles of Explainable Artificial Intelligence. Springer International Publishing (2021) by Francesca Pratesi.