Rules List and Rules Set


In Brief

A decision rule is generally formed by a set of conditions and a consequent, i.e., if conditions, then consequent.

More in Detail

Given a record, a decision rule assigns it the outcome specified in the consequent if the record verifies the conditions [2]. The most common rules are if-then rules whose clauses are combined in conjunction. In contrast, an m-of-n rule specifies a set of n conditions and applies its consequent if at least m of them are verified [3]. When several rules are used together, different strategies can be adopted to select the outcome.
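
To make the difference between the two kinds of rules concrete, here is a minimal Python sketch. It assumes that a record is a dictionary of feature values and that each condition is a boolean predicate on the record; the function names and the loan-application features are hypothetical and only for illustration. Following the usual reading of m-of-n concepts, the m-of-n rule fires when at least m of its conditions hold.

```python
from typing import Callable, Dict, List

# Assumption: a record maps feature names to numeric values,
# and a condition is any predicate on a record.
Record = Dict[str, float]
Condition = Callable[[Record], bool]


def conjunctive_rule_fires(conditions: List[Condition], record: Record) -> bool:
    """If-then rule: all clauses, joined in conjunction, must be verified."""
    return all(cond(record) for cond in conditions)


def m_of_n_rule_fires(conditions: List[Condition], m: int, record: Record) -> bool:
    """m-of-n rule: at least m of the n conditions must be verified."""
    return sum(cond(record) for cond in conditions) >= m


# Hypothetical conditions on a hypothetical loan-application record.
conditions = [
    lambda r: r["age"] > 30,
    lambda r: r["income"] > 40_000,
    lambda r: r["debts"] < 10_000,
]
record = {"age": 45, "income": 35_000, "debts": 2_000}

print(conjunctive_rule_fires(conditions, record))  # False: the income clause fails
print(m_of_n_rule_fires(conditions, 2, record))    # True: 2 of the 3 conditions hold
```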

In a rule list, the order of the rules matters: the model returns the outcome of the first rule whose conditions are verified by the record []. For instance, falling rule lists are if-then rules ordered with respect to the probability of a specific outcome []. Decision sets, on the other hand, are unordered collections of rules. Each rule is an independent classifier that can assign its outcome without regard for any other rule [4], so voting strategies are used to select the final outcome.
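
The two selection strategies can be contrasted in a short Python sketch. It assumes that each rule is a conjunction of predicates with a single outcome and uses plain majority voting for the decision set; the `Rule` class, the function names, and the toy record are hypothetical, and actual decision-set methods may resolve conflicts differently.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Dict, List

Record = Dict[str, float]


@dataclass
class Rule:
    conditions: List[Callable[[Record], bool]]  # clauses in conjunction
    outcome: str                                 # the consequent

    def covers(self, record: Record) -> bool:
        return all(cond(record) for cond in self.conditions)


def predict_rule_list(rules: List[Rule], record: Record, default: str) -> str:
    """Ordered list: return the outcome of the first rule whose conditions hold."""
    for rule in rules:
        if rule.covers(record):
            return rule.outcome
    return default  # no rule fires


def predict_decision_set(rules: List[Rule], record: Record, default: str) -> str:
    """Unordered set: every covering rule votes and the majority outcome wins.
    Ties are broken by the order in which outcomes are first seen."""
    votes = Counter(rule.outcome for rule in rules if rule.covers(record))
    if not votes:
        return default
    return votes.most_common(1)[0][0]


# Hypothetical rules and record.
rules = [
    Rule([lambda r: r["age"] < 25], "deny"),
    Rule([lambda r: r["income"] > 40_000], "grant"),
    Rule([lambda r: r["debts"] < 5_000], "grant"),
]
record = {"age": 22, "income": 50_000, "debts": 1_000}

print(predict_rule_list(rules, record, default="unknown"))     # deny
print(predict_decision_set(rules, record, default="unknown"))  # grant
```

On the toy record the rule list answers "deny" because its first rule fires, whereas the decision set answers "grant" because two of the three covering rules vote for it.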

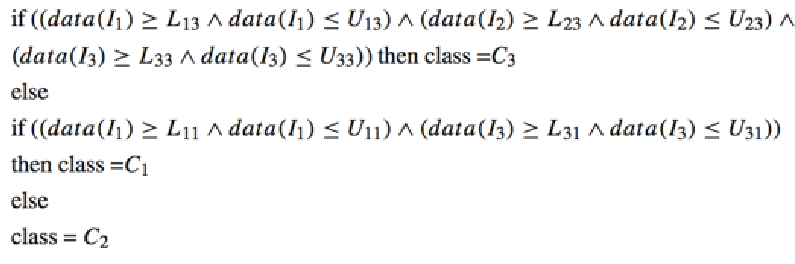

Rule lists and rule sets are adopted as explanations both by explanation methods and by transparent classifiers. In both cases, the reference context is tabular data. In [5], the explanation method rxren uses a rule list to unveil the logic behind a trained neural network. First, rxren prunes the insignificant input neurons and identifies the data range necessary to classify the given test instance with a specific class. Second, rxren generates the classification rules for each class label by exploiting the previously identified data ranges, and improves the initial rule list through a process that prunes and updates the rules. Figure 18 shows an example of rules returned by rxren. A survey of techniques for extracting rules from neural networks is given in [6]. All the approaches in [6], including rxren, are model-specific explainers.
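
The rule-generation step can be illustrated with a heavily simplified Python sketch; it is not the rxren algorithm. It assumes that input pruning has already been done (the retained inputs are given as the hypothetical list `kept`) and, as a stand-in for rxren's own range identification, which is derived from the trained network, it takes the minimum and maximum of each retained input over the records that the network assigns to each class. The rule pruning and updating step is omitted, and all names and data are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Interval = Tuple[float, float]
# A rule: ({input index: (low, high)}, class label)
RangeRule = Tuple[Dict[int, Interval], str]


def class_ranges(X: Sequence[Sequence[float]], y_net: Sequence[str],
                 kept: Sequence[int]) -> Dict[str, Dict[int, Interval]]:
    """Assumed stand-in for range identification: per class, the min and max
    of every retained input over the records the network assigns to it."""
    ranges: Dict[str, Dict[int, Interval]] = {}
    for label in sorted(set(y_net)):
        rows = [x for x, lab in zip(X, y_net) if lab == label]
        ranges[label] = {f: (min(r[f] for r in rows), max(r[f] for r in rows))
                         for f in kept}
    return ranges


def rules_from_ranges(ranges: Dict[str, Dict[int, Interval]]) -> List[RangeRule]:
    """One conjunctive rule per class: every retained input must fall in its range."""
    return [(intervals, label) for label, intervals in ranges.items()]


def classify(rules: List[RangeRule], x: Sequence[float], default: str) -> str:
    """Read the rules as an ordered list: the first rule whose ranges all hold wins."""
    for intervals, label in rules:
        if all(lo <= x[f] <= hi for f, (lo, hi) in intervals.items()):
            return label
    return default


# Hypothetical data; y_net plays the role of the trained network's predictions.
X = [[1.0, 5.0, 0.3], [2.0, 6.0, 0.9], [7.0, 1.0, 0.5], [8.0, 2.0, 0.1]]
y_net = ["a", "a", "b", "b"]
kept = [0, 1]  # assume input 2 was pruned as insignificant

rules = rules_from_ranges(class_ranges(X, y_net, kept))
for intervals, label in rules:
    conds = " and ".join(f"{lo} <= x{f} <= {hi}" for f, (lo, hi) in intervals.items())
    print(f"if {conds} then {label}")
print(classify(rules, [1.5, 5.5, 0.0], default="unknown"))  # a
```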

As previously mentioned, an alternative line of research to black-box explanation is the design of transparent models for AI systems. The corels method [7] is a technique for building rule lists for discretized tabular datasets. corels returns a rule list that is certifiably optimal with respect to its objective. An example of a rule list returned by corels is reported in Figure {number}. The rules are read one after the other, and the AI system takes the decision of the first rule whose conditions are verified. Decision sets are built by the method presented in [4]. The if-then rules extracted for each set are accurate, non-overlapping, and independent. Since each rule is independently applicable, decision sets are simple, succinct, and easy to interpret. A decision set is extracted by jointly maximizing interpretability and predictive accuracy through a two-step approach: frequent itemset mining generates candidate rules, and a learning method selects among them. The method proposed in [8] merges local explanation rules into a single global weighted rule list by means of a scoring system.
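
The two-step construction of a decision set can be sketched in Python under our own assumptions; this is not the method of [4]. Candidates come from a brute-force frequent itemset miner (a stand-in for an algorithm such as Apriori), and the selection step greedily maximizes a hypothetical objective (`gain`, `size_penalty`, and `overlap_penalty` are illustrative names) that rewards correct coverage and penalizes long and overlapping rules, whereas [4] optimizes a richer joint objective with its own procedure. The weather-style data are made up.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, FrozenSet, List, Set, Tuple

Record = Dict[str, str]
Item = Tuple[str, str]                 # (feature, value)
Rule = Tuple[FrozenSet[Item], str]     # (antecedent, consequent)


def covers(antecedent: FrozenSet[Item], record: Record) -> bool:
    """A conjunctive antecedent covers a record if every (feature, value) matches."""
    return all(record.get(f) == v for f, v in antecedent)


def mine_candidates(records: List[Record], labels: List[str],
                    min_support: float, max_len: int) -> List[Rule]:
    """Step 1: brute-force frequent itemset mining. Each itemset with enough
    support becomes an antecedent; its consequent is the majority label."""
    items = sorted({(f, v) for r in records for f, v in r.items()})
    candidates: List[Rule] = []
    for k in range(1, max_len + 1):
        for combo in combinations(items, k):
            if len({f for f, _ in combo}) < k:
                continue  # two values of one feature never co-occur
            antecedent = frozenset(combo)
            covered_labels = [lab for r, lab in zip(records, labels) if covers(antecedent, r)]
            if covered_labels and len(covered_labels) / len(records) >= min_support:
                outcome = Counter(covered_labels).most_common(1)[0][0]
                candidates.append((antecedent, outcome))
    return candidates


def gain(rule: Rule, records: List[Record], labels: List[str], covered: Set[int],
         size_penalty: float, overlap_penalty: float) -> float:
    """Hypothetical objective: reward correct predictions on uncovered records,
    penalize wrong ones, long antecedents, and overlap with selected rules."""
    antecedent, outcome = rule
    hits = [i for i, r in enumerate(records) if covers(antecedent, r)]
    new = [i for i in hits if i not in covered]
    correct = sum(labels[i] == outcome for i in new)
    wrong = len(new) - correct
    overlap = len(hits) - len(new)
    return correct - wrong - size_penalty * len(antecedent) - overlap_penalty * overlap


def select_rules(candidates: List[Rule], records: List[Record], labels: List[str],
                 size_penalty: float = 0.5, overlap_penalty: float = 1.0) -> List[Rule]:
    """Step 2: greedily add the candidate with the highest positive gain."""
    selected: List[Rule] = []
    covered: Set[int] = set()
    remaining = list(candidates)
    while remaining:
        best = max(remaining, key=lambda rule: gain(rule, records, labels, covered,
                                                    size_penalty, overlap_penalty))
        if gain(best, records, labels, covered, size_penalty, overlap_penalty) <= 0:
            break
        selected.append(best)
        remaining.remove(best)
        covered |= {i for i, r in enumerate(records) if covers(best[0], r)}
    return selected


records = [
    {"outlook": "sunny", "wind": "weak"}, {"outlook": "sunny", "wind": "strong"},
    {"outlook": "rain", "wind": "weak"}, {"outlook": "rain", "wind": "strong"},
    {"outlook": "overcast", "wind": "weak"}, {"outlook": "overcast", "wind": "strong"},
]
labels = ["play", "stay", "play", "stay", "play", "play"]

candidates = mine_candidates(records, labels, min_support=0.3, max_len=2)
decision_set = select_rules(candidates, records, labels)
for antecedent, outcome in decision_set:
    print(" and ".join(f"{f} = {v}" for f, v in sorted(antecedent)), "->", outcome)
```

On this toy data the selected decision set is `wind = weak -> play` and `wind = strong -> stay`. Once the first rule is chosen, the overlap penalty makes `outlook = overcast -> play` unattractive, because it would cover a record that is already covered.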

Bibliography

- [1] Riccardo Guidotti, Anna Monreale, Dino Pedreschi, and Fosca Giannotti. Principles of Explainable Artificial Intelligence. Springer International Publishing, 2021.

- [2] R. Agrawal and R. Srikant. Fast algorithms for mining association rules. In International Conference on Very Large Data Bases (VLDB), volume 1215, 487–499. 1994.

- [3] Patrick M. Murphy and Michael J. Pazzani. ID2-of-3: Constructive induction of M-of-N concepts for discriminators in decision trees. In Machine Learning Proceedings 1991, 183–187. Elsevier, 1991.

- [4] Himabindu Lakkaraju, Stephen H. Bach, and Jure Leskovec. Interpretable decision sets: a joint framework for description and prediction. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 1675–1684. ACM, 2016.

- [5] M. G. Augasta and T. Kathirvalavakumar. Reverse engineering the neural networks for rule extraction in classification problems. Neural Processing Letters, 2012.

- [6] R. Andrews, J. Diederich, and A. B. Tickle. Survey and critique of techniques for extracting rules from trained artificial neural networks. Knowledge-Based Systems, 1995.

- [7] E. Angelino, N. Larus-Stone, D. Alabi, M. Seltzer, and C. Rudin. Learning certifiably optimal rule lists. In Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. 2017.

- [8] M. Setzu, R. Guidotti, A. Monreale, and F. Turini. Global explanations with local scoring. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, 159–171. 2019.

This entry was adapted by Francesca Pratesi from Guidotti, Monreale, Pedreschi, and Giannotti, Principles of Explainable Artificial Intelligence, Springer International Publishing (2021).