Single Tree Approximation

Contents

Single Tree Approximation#

In brief#

The single tree appoximation is an approach that aims at building a decision tree to approximate the behavior of a black box, typically a neural network.

More in detail#

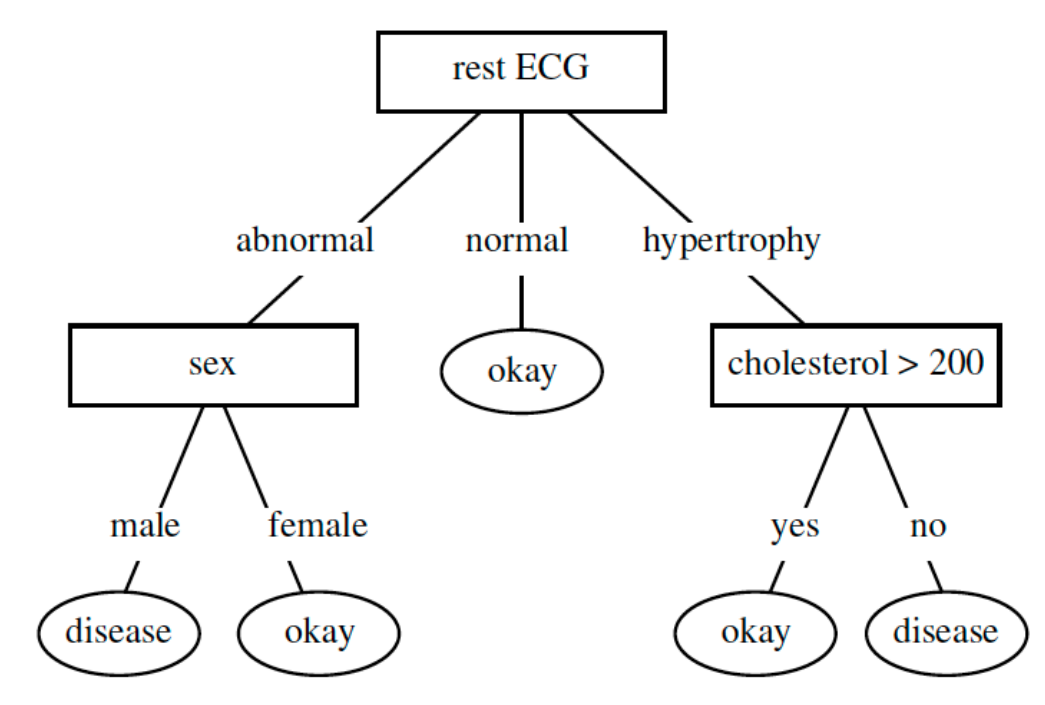

Decision trees are the simplest example of transparent techniques. Moreover, they can be built to provide post-hoc explanations of black-boxes. One of the first approaches introduced to explain neural networks is trepan [2]. trepan is a global explanation method that is able to model the whole logic of a neural network working on tabular data with a single decision tree [3]. The decision tree returned by trepan as an explanation is a global transparent surrogate. Indeed, every path from the root of the tree to a leaf explains the reasons for the final decision that is reported in the leaf itself. An example of a decision tree returned by trepan is illustrated in Fig. 6.

This global explanation reveals that the black box first focuses on the value of the feature rest ECG; Depending on its degree (abnormal, normal, hypertrophy), tha black box takes different decisions depending on additional factors such as sex or cholesterol. In particular, trepan queries the neural network to induce the decision tree by maximizing the gain ratio [3] on the data with respect to the neural network’s predictions.

A weakness of common decision trees like ID3 or C4.5 [4] is that the amount of data to find the splits near to the leaves is much lower than those used initially. Thus, in order to retrieve how a neural network works in detail, trepan adopts a synthetic generation of data that respects the path of each node before performing the splitting such that the same amount of data is used for every split. In addition, it allows flexibility by using “m-of-n” rules where only m conditions out of n are required to be satisfied to classify a record. Therefore, trepan maximizes the fidelity of the single tree explanation with respect to the black box decision.

It is worth noting that, even though trepan is proposed to explain neural networks, in reality it is model-agnostic because it does not exploit any internal characteristic of neural networks to retrieve the explanation tree. Moreover, it does not place any requirements on either the architecture of the network or its training method. Thus, it can be theoretically employed to provide explanations to every kind of classifier.

In [5] is presented an extension of trepan that aims to keep the tree explanation simple and compact by introducing four splitting approaches in order to find the most relevant features during the tree construction. In [6], genetic programming is used for building a single decision tree that approximates the behavior of a neural network ensemble by considering additional genetic features obtained as combinations of the original data and the novel data annotated by the black box models. Both methods described in [5], [6], like trepan, return explanations in the form of a global decision tree.

Bibliography#

- 1

Riccardo Guidotti, Anna Monreale, Dino Pedreschi, and Fosca Giannotti. Principles of Explainable Artificial Intelligence. Springer International Publishing, 2021.

- 2

M. Craven and J. W. Shavlik. Extracting tree-structured representations of trained networks. In Advances in neural information processing systems, volume 8, 24–30. 1996.

- 3(1,2)

Pang-Ning Tan, Michael Steinbach, and Vipin Kumar. Introduction to data mining. Pearson Addison-Wesley, 2006.

- 4

J Ross Quinlan. C4.5: Programs for Machine Learning. Elsevier, 1993.

- 5(1,2)

Olcay Boz. Extracting decision trees from trained neural networks. In Proceedings of the eighth ACM SIGKDD international conference on Knowledge discovery and data mining, 456–461. 2002.

- 6(1,2)

Ulf Johansson and Lars Niklasson. Evolving decision trees using oracle guides. In 2009 IEEE Symposium on Computational Intelligence and Data Mining, 238–244. IEEE, 2009.

This entry was readapted from Guidotti, Monreale, Pedreschi, Giannotti. Principles of Explainable Artificial Intelligence. Springer International Publishing (2021) by Francesca Pratesi and Riccardo Guidotti.