Counterfactuals


In Brief

A counterfactual explanation shows what should have been different to change the decision of an AI system. For example, a counterfactual explanation can be a local explanation of a given instance that provides the nearest instances leading to a different decision, or that describes a small change in the model's input that changes the model's outcome.
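The "nearest instance with a different decision" idea can be sketched in a few lines. This is only an illustration under stated assumptions: `nearest_counterfactual`, `toy_model`, the loan features, and the data are hypothetical names and values chosen here, and plain Euclidean distance stands in for whatever similarity measure a real method would use.

```python
import math

def nearest_counterfactual(instance, dataset, predict):
    """Return the dataset row closest to `instance` whose prediction differs."""
    original = predict(instance)
    # Keep only rows that the model decides differently from the instance.
    candidates = [row for row in dataset if predict(row) != original]
    if not candidates:
        return None
    # Euclidean distance is an assumption; any suitable metric could be used.
    return min(candidates, key=lambda row: math.dist(instance, row))

# Hypothetical toy model: grant a loan when income exceeds 900.
def toy_model(row):
    income, age = row
    return "grant" if income > 900 else "deny"

data = [(500.0, 30.0), (950.0, 41.0), (1200.0, 35.0), (880.0, 52.0)]
applicant = (800.0, 40.0)  # denied by the toy model

cf = nearest_counterfactual(applicant, data, toy_model)
print(cf)  # the closest known instance that receives a different decision
```

Comparing `applicant` with `cf` feature by feature then shows which values would have had to differ.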

More in Detail

Counterfactual explanations are becoming an essential component of XAI methods and applications [2] because they help people reason about the cause-effect relations between the analyzed instances and the AI decision [3]. Indeed, while direct explanations such as feature importance, decision rules, and saliency maps are important for understanding the reasons for a certain decision, a counterfactual explanation reveals what should be different in a given instance to obtain an alternative decision [4]. Thinking in terms of “counterfactuals” requires the ability to imagine a hypothetical causal situation that contradicts the observed one [2]. The “causes” of the situation are the features that describe the instance under investigation and that “caused” a certain decision, while the “event” models the decision itself.

The most widely used counterfactual explanations are prototype-based counterfactuals. In [6], counterfactual explanations are produced by solving an optimization problem: given an instance under analysis, a training dataset, and a black-box function, the method returns an instance that is similar to the input one, with minimum changes (i.e., minimum distance), but that flips the black-box outcome. The counterfactual explanation thus describes the smallest change that can be made in that particular case to obtain a different decision from the AI. In [7], the generation of diverse counterfactuals using mixed integer programming for linear models is proposed. ABELE [8] also returns synthetic counter-exemplar images that highlight the similarities and differences between images leading to the same decision and images leading to other decisions.
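To make the "minimum change that flips the outcome" idea concrete, here is a minimal sketch that uses a brute-force grid search. It is not the optimization procedure of [6]; it only illustrates the objective on a toy problem. The function `minimal_change_counterfactual`, the `black_box` rule, the feature names, and the step sizes are all assumptions introduced for this example, and the cost is simply the number of unit steps applied (an L1 distance in step units).

```python
import itertools

def minimal_change_counterfactual(instance, predict, steps, max_steps=5):
    """Search a grid around `instance` for the cheapest point that flips `predict`.

    Each feature i may move by k * steps[i] with k in [-max_steps, max_steps];
    the cost of a candidate is the total number of steps taken.
    """
    original = predict(instance)
    offsets = range(-max_steps, max_steps + 1)
    best, best_cost = None, None
    for combo in itertools.product(offsets, repeat=len(instance)):
        cost = sum(abs(k) for k in combo)
        # Skip the instance itself and anything not cheaper than the best so far.
        if cost == 0 or (best_cost is not None and cost >= best_cost):
            continue
        candidate = tuple(x + k * s for x, k, s in zip(instance, combo, steps))
        if predict(candidate) != original:
            best, best_cost = candidate, cost
    return best, best_cost

# Hypothetical black box over (income, savings).
def black_box(row):
    income, savings = row
    return "grant" if income + 0.5 * savings > 990 else "deny"

applicant = (800, 100)  # denied by the toy black box
counterfactual, cost = minimal_change_counterfactual(
    applicant, black_box, steps=(50, 50)
)
print(counterfactual, cost)
```

The search is exponential in the number of features, so it only suits tiny examples; practical methods replace it with a proper optimizer and a distance measure suited to the data.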

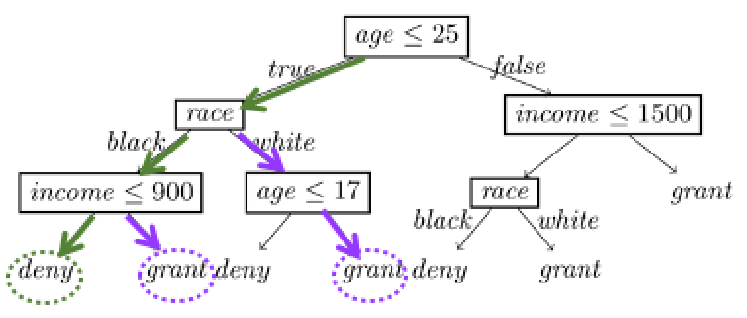

Another way to model counterfactual explanations is the logical form that describes a causal situation such as “If X had not occurred, Y would not have occurred” [2]. The local model-agnostic LORE explanation method [9], besides a factual explanation rule, also provides a set of counterfactual rules that illustrate the logic used by the AI to obtain a different decision with minimum changes. For example, in Figure 16, the set of counterfactual rules is highlighted in purple and shows that if income > 900 then grant, or if race = white then grant, clarifying which particular changes would flip the decision. In [10], the authors propose a local neighborhood generation method based on a Growing Spheres algorithm that can be used both for finding counterfactual instances and as a basis for extracting counterfactual rules.
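The neighborhood-growing idea can be sketched roughly as follows. This is a simplified illustration, not the algorithm as published in [10]: it keeps only the growing phase (sampling in ever larger spherical layers until a differently classified point appears) and omits any later step that makes the change sparse. The names `sample_in_layer`, `growing_spheres`, and `toy_model`, as well as the step size, sample count, and seed, are assumptions made for this example.

```python
import math
import random

def sample_in_layer(center, low, high, rng):
    """Draw one point uniformly from the layer low <= ||x - center|| <= high."""
    dim = len(center)
    # A normalized Gaussian vector gives a uniformly random direction.
    direction = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(v * v for v in direction))
    # Inverse-CDF sampling of the radius so that volume is covered uniformly.
    radius = (rng.random() * (high ** dim - low ** dim) + low ** dim) ** (1.0 / dim)
    return tuple(c + radius * v / norm for c, v in zip(center, direction))

def growing_spheres(instance, predict, step=0.2, n_samples=200, max_layers=50, seed=0):
    """Grow layers around `instance` until a point with a different prediction is found."""
    rng = random.Random(seed)
    original = predict(instance)
    for i in range(max_layers):
        low, high = i * step, (i + 1) * step
        layer = [sample_in_layer(instance, low, high, rng) for _ in range(n_samples)]
        enemies = [p for p in layer if predict(p) != original]
        if enemies:
            # Return the closest differently classified sample in this layer.
            return min(enemies, key=lambda p: math.dist(instance, p))
    return None

# Hypothetical black box on two already-normalized features.
def toy_model(x):
    return "grant" if x[0] + x[1] > 1.0 else "deny"

instance = (0.0, 0.0)
cf = growing_spheres(instance, toy_model)
print(cf, math.dist(instance, cf))
```

The features that differ most between `instance` and `cf` are natural candidates for the premises of a counterfactual rule, although turning them into rules requires a further step that is not shown here.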

Bibliography

- 1

Riccardo Guidotti, Anna Monreale, Dino Pedreschi, and Fosca Giannotti. Principles of Explainable Artificial Intelligence. Springer International Publishing, 2021.

- 2

A. Apicella, F. Isgrò, R. Prevete, and G. Tamburrini. Contrastive explanations to classification systems using sparse dictionaries. In International Conference on Image Analysis and Processing, 207–218. 2019.

- 3

Ruth M. J. Byrne. Counterfactuals in explainable artificial intelligence (XAI): evidence from human reasoning. In IJCAI, 6276–6282. 2019.

- 4

Sandra Wachter, Brent Mittelstadt, and Luciano Floridi. Why a right to explanation of automated decision-making does not exist in the General Data Protection Regulation. International Data Privacy Law, 7(2):76–99, 2017.

- 5

Christoph Molnar. Interpretable Machine Learning. Lulu.com, 2020.

- 6

Sandra Wachter, Brent Mittelstadt, and Chris Russell. Counterfactual explanations without opening the black box: automated decisions and the GDPR. Harvard Journal of Law & Technology, 31:841, 2017.

- 7

Berk Ustun, Alexander Spangher, and Yang Liu. Actionable recourse in linear classification. In Proceedings of the Conference on Fairness, Accountability, and Transparency, 10–19. 2019.

- 8

Riccardo Guidotti, Anna Monreale, Stan Matwin, and Dino Pedreschi. Black box explanation by learning image exemplars in the latent feature space. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, 189–205. Springer, 2019.

- 9

Riccardo Guidotti, Anna Monreale, Fosca Giannotti, Dino Pedreschi, Salvatore Ruggieri, and Franco Turini. Factual and counterfactual explanations for black box decision making. IEEE Intelligent Systems, 34(6):14–23, 2019.

- 10

Thibault Laugel, Marie-Jeanne Lesot, Christophe Marsala, Xavier Renard, and Marcin Detyniecki. Inverse classification for comparison-based interpretability in machine learning. arXiv preprint arXiv:1712.08443, 2017.

This entry was adapted from Guidotti, Monreale, Pedreschi, Giannotti. Principles of Explainable Artificial Intelligence. Springer International Publishing (2021) by Francesca Pratesi.